ETH AI Digest: #23

Physical neural networks achieve universal learning, robots see targets not locations, VLMs stumble on icon-based math

In this week’s digest:

Universal Physical Neural Networks — Mathematical proof establishes when physical systems can learn arbitrary functions, demonstrated with free-space optical hardware achieving high accuracy on image classification

Perception-Aware Robot Inspection — RL framework optimizes for target visibility rather than proximity, finding shorter paths than navigation methods and transferring successfully to Boston Dynamics Spot

Vision-Language Models Fail Visual Math — Systematic analysis reveals visual counting as the critical bottleneck when AI solves equations with icon-based variables, exposing fundamental reasoning gaps

Selected Papers of the Week

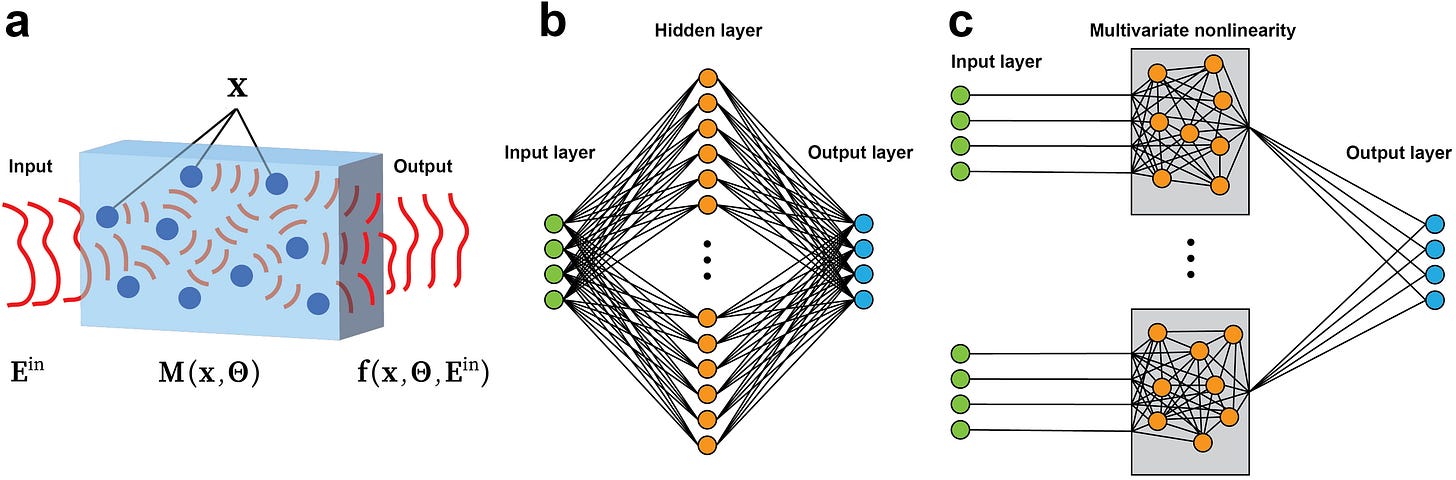

1. Universality of physical neural networks with multivariate nonlinearity

Proving when physical systems can learn anything: a foundation for energy-efficient AI hardware.

✍️ Authors: Benjamin Savinson, David J. Norris, Siddhartha Mishra, Samuel Lanthaler

🏛️ Lab: Seminar for Applied Mathematics

⚡ Summary

This paper uses a mathematical universality theorem to establish when physical neural networks can learn arbitrary input-output relationships.

The authors introduce “multivariate nonlinearity,” where nonlinear functions mix input components, and design a free-space optical system that satisfies their universality criterion.
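To make "mixing" concrete, here is a toy Python sketch. It is our own illustration, not the authors' optical model. The squared magnitude of a superposition, `(x1 + x2) ** 2`, stands in as a hypothetical multivariate nonlinearity, loosely reminiscent of intensity detection. `tanh` applied to each input separately stands in for a nonlinearity that does not mix components. All function names are hypothetical. The mixed second difference computed by `interaction` is zero for any function of the form g(x1) + h(x2) and nonzero when the components interact.

```python
import math

def elementwise(x1: float, x2: float) -> float:
    """No mixing: each input passes through its own nonlinearity, then outputs are summed."""
    return math.tanh(x1) + math.tanh(x2)

def multivariate(x1: float, x2: float) -> float:
    """Hypothetical mixing nonlinearity: squared magnitude of a superposition of both inputs."""
    return (x1 + x2) ** 2

def interaction(f, x1: float, x2: float) -> float:
    """Mixed second difference; vanishes (up to rounding) for any f of the form g(x1) + h(x2)."""
    return f(x1, x2) - f(x1, 0.0) - f(0.0, x2) + f(0.0, 0.0)

print(interaction(elementwise, 0.5, -1.2))
print(interaction(multivariate, 0.5, -1.2))
```

For the mixing function, the interaction term equals 2·x1·x2 (here -1.2), which is a cross term that no sum of single-input functions can produce. For the componentwise function, it is zero apart from floating-point rounding.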

Their system achieves high accuracy on image classification tasks, and its performance scales with system size, as their theory predicts.

By proposing both spatial and temporal scaling approaches, they provide a path to universal, energy-efficient AI hardware beyond optical systems.

2. Sight Over Site: Perception-Aware Reinforcement Learning for Efficient Robotic Inspection

Teaching robots to see targets efficiently instead of just reaching their locations.

✍️ Authors: Richard Kuhlmann, Jakob Wolfram, Boyang Sun, Jiaxu Xing, Davide Scaramuzza, Marc Pollefeys, Cesar Cadena

🏛️ Lab: Computer Vision and Geometry Group, Robotic Systems Lab

⚡ Summary

Traditional robotic inspection approaches focus on navigating to target locations, often resulting in inefficient paths when visibility is the true objective.

This paper introduces a perception-aware reinforcement learning framework that explicitly optimizes for target visibility rather than physical proximity.
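The difference between the two objectives can be sketched with a toy 2D reward. Everything here is a hypothetical simplification, not the paper's reward or observation model. The robot has a pose with a heading, the camera has an assumed 60° field of view and 5 m range, and circular obstacles block line of sight. A proximity reward favors any pose close to the target. A visibility reward favors any pose from which the target can actually be seen.

```python
import math
from dataclasses import dataclass

@dataclass
class Pose:
    x: float
    y: float
    heading: float  # radians, 0 = facing +x

Point = tuple[float, float]
Obstacle = tuple[Point, float]  # (center, radius)

def segment_hits_circle(p: Point, q: Point, center: Point, radius: float) -> bool:
    """True if the straight segment p -> q passes within `radius` of `center`."""
    dx, dy = q[0] - p[0], q[1] - p[1]
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.dist(p, center) <= radius
    # Project the circle center onto the segment and clamp to its endpoints
    t = ((center[0] - p[0]) * dx + (center[1] - p[1]) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    closest = (p[0] + t * dx, p[1] + t * dy)
    return math.dist(closest, center) <= radius

def target_visible(pose: Pose, target: Point, obstacles: list[Obstacle],
                   fov: float = math.radians(60), max_range: float = 5.0) -> bool:
    """Visible = within range, inside the field of view, and with a clear line of sight."""
    robot = (pose.x, pose.y)
    if math.dist(robot, target) > max_range:
        return False
    bearing = math.atan2(target[1] - pose.y, target[0] - pose.x) - pose.heading
    bearing = math.atan2(math.sin(bearing), math.cos(bearing))  # wrap to [-pi, pi]
    if abs(bearing) > fov / 2:
        return False
    return not any(segment_hits_circle(robot, target, c, r) for c, r in obstacles)

def proximity_reward(pose: Pose, target: Point) -> float:
    """Location-driven objective: the closer, the better."""
    return -math.dist((pose.x, pose.y), target)

def visibility_reward(pose: Pose, target: Point, obstacles: list[Obstacle],
                      step_penalty: float = 0.01) -> float:
    """Perception-driven objective: reward seeing the target, not being near it."""
    return 1.0 if target_visible(pose, target, obstacles) else -step_penalty

target = (4.0, 0.0)
obstacles = [((2.0, 0.0), 0.5)]
a = Pose(1.0, 0.0, 0.0)                    # close, but the obstacle blocks the view
b = Pose(1.0, 3.0, math.atan2(-3.0, 3.0))  # farther, facing the target with a clear view

print(proximity_reward(a, target), proximity_reward(b, target))
print(visibility_reward(a, target, obstacles), visibility_reward(b, target, obstacles))
```

Pose `a` is closer to the target but looks straight into the obstacle, so the proximity reward prefers it. Pose `b` is farther away yet has a clear view, so the visibility reward prefers it. An inspection policy trained on the second signal has no reason to approach a target it can already see.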

Trained entirely in simulation without maps, the approach finds shorter inspection paths than state-of-the-art navigation methods in both simulated and real-world tests.

The system successfully transfers to a Boston Dynamics Spot robot, demonstrating practical applications for industrial monitoring and search-and-rescue operations.

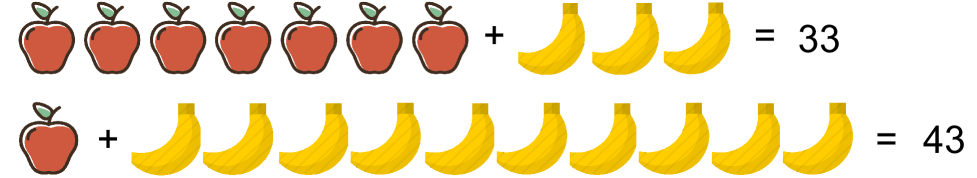

3. Can Vision-Language Models Solve Visual Math Equations?

When icons replace numbers: Why advanced AI models struggle with visual equation solving.

✍️ Authors: Monjoy Narayan Choudhury, Junling Wang, Yifan Hou, Mrinmaya Sachan

🏛️ Lab: Language, Reasoning and Education Lab

⚡ Summary

This paper investigates why Vision-Language Models struggle with visual equation solving, where variables are represented by icons and coefficients are determined by counting.

By systematically decomposing the task, the researchers identify visual counting as the primary bottleneck, even when models recognize the variables and solve the symbolic equations perfectly.
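The decomposition can be illustrated with a toy pipeline that separates two of the abilities: counting icons to obtain coefficients, then solving the resulting linear system. This is a hypothetical text-based stand-in (icon names instead of images, and a plain Cramer's rule solver), not the benchmark's actual setup or the authors' code. It shows why counting is decisive: the solver is exact, so a single counting mistake flows straight into a wrong answer.

```python
from collections import Counter
from fractions import Fraction

VARIABLES = ["apple", "banana"]  # hypothetical icon names standing in for images

def count_coefficients(icons: list[str], variables: list[str]) -> list[int]:
    """Counting step: each coefficient is how often that variable's icon appears."""
    counts = Counter(icons)
    return [counts[v] for v in variables]

def solve_2x2(a: list[list[int]], b: list[int]) -> tuple[Fraction, Fraction]:
    """Solving step: Cramer's rule for a[0][0]*x + a[0][1]*y = b[0], a[1][0]*x + a[1][1]*y = b[1]."""
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    if det == 0:
        raise ValueError("system has no unique solution")
    x = Fraction(b[0] * a[1][1] - a[0][1] * b[1], det)
    y = Fraction(a[0][0] * b[1] - b[0] * a[1][0], det)
    return x, y

# Two equations: apple apple banana = 8, and apple banana banana banana = 9
equations = [
    (["apple", "apple", "banana"], 8),
    (["apple", "banana", "banana", "banana"], 9),
]

coeffs = [count_coefficients(icons, VARIABLES) for icons, _ in equations]
rhs = [total for _, total in equations]
correct = solve_2x2(coeffs, rhs)

# Same exact solver, but one apple over-counted in the first equation
miscounted = solve_2x2([[3, 1], coeffs[1]], rhs)
print("correct counts:", correct)
print("one miscount:  ", miscounted)
```

With correct counts the system gives apple = 3 and banana = 2; over-counting one apple yields 15/8 and 19/8, even though the solving step is flawless.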

Performance further degrades when models must compose multiple abilities or tackle more complex equations, revealing fundamental limitations in integrating visual perception with mathematical reasoning.

These findings highlight critical gaps in current AI systems and provide direction for improving grounded, multi-step reasoning capabilities.

Other noteworthy articles

Chimera: Diagnosing Shortcut Learning in Visual-Language Understanding: CHIMERA reveals how vision-language models cheat at diagram comprehension through language shortcuts

Bridging the Gap Between Promise and Performance for Microscaling FP4 Quantization: Unlocking the potential of new 4-bit formats with specialized algorithms for efficient AI deployment

Beyond Outliers: A Study of Optimizers Under Quantization: How the choice of optimizer affects model robustness to quantization