ETH AI Digest: #17

10× faster 3D human modeling, bias-free text embeddings via linear transformations, and lattice theory unlocks efficient LLM compression

In this week's digest:

Supercharged 3D Body Modeling — New neural body model achieves 10× faster processing and 6× lower memory usage while enabling realistic human-environment interactions.

Breaking Free from Language Bias — Simple linear transformations eliminate source and language bias in text embeddings while preserving semantic meaning.

Lattice Theory Meets LLMs — Researchers discover GPTQ's hidden connection to classical mathematics, opening new paths for efficient language model deployment.

Selected Papers of the Week

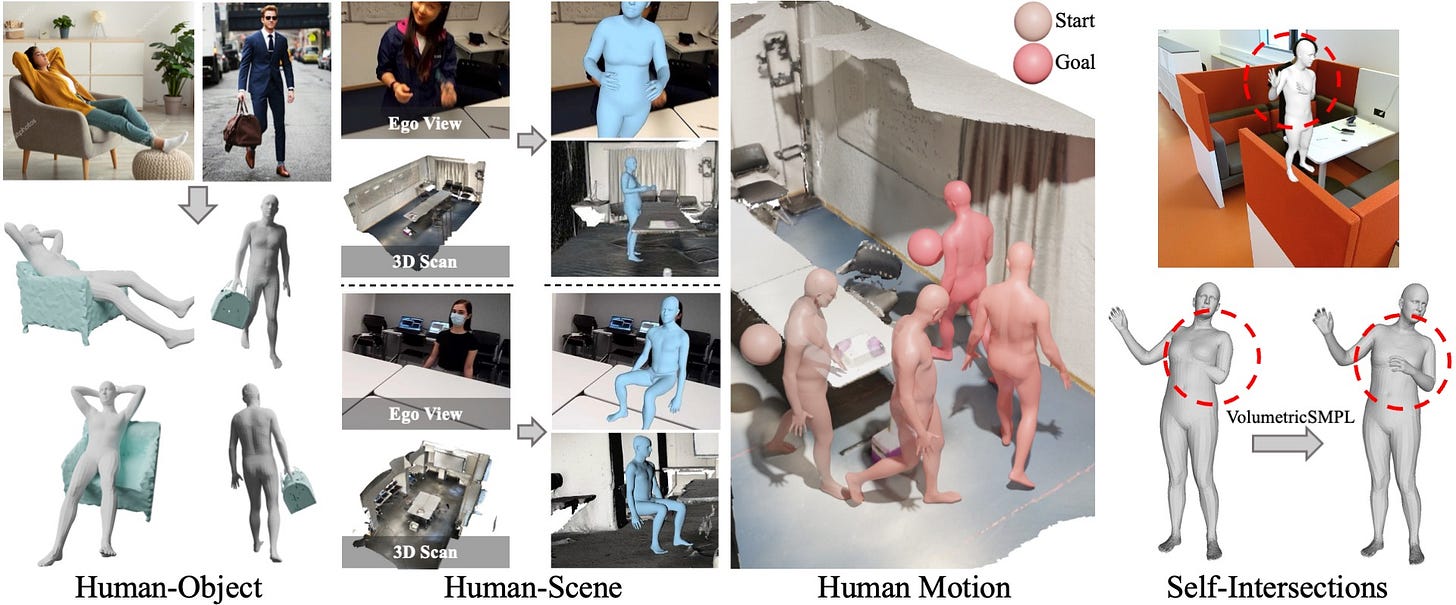

1. VolumetricSMPL: A Neural Volumetric Body Model for Efficient Interactions, Contacts, and Collisions

10× faster, 6× less memory: Neural Blend Weights enable efficient human-environment interactions in 3D.

✍️ Authors: Marko Mihajlovic, Siwei Zhang, Gen Li, Kaifeng Zhao, Lea Müller, Siyu Tang

🏛️ Lab: Computer Vision and Learning Group

⚡ Summary

VolumetricSMPL addresses computational inefficiencies in existing volumetric human body models by introducing Neural Blend Weights that generate compact MLP decoders.

This approach achieves 10× faster inference and 6× lower GPU memory usage than prior work while representing bodies as Signed Distance Fields for smoother gradients.

Applications demonstrate dramatic efficiency improvements: 500× faster human-object reconstruction, 3× faster human mesh recovery, and 7× faster motion synthesis.

The model enables more realistic human interactions with environments, objects, and scenes while maintaining high accuracy.
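To illustrate why a signed distance representation is convenient for contacts and collisions, here is a minimal sketch. It is not the VolumetricSMPL model: an analytic sphere stands in for the neural body, and the function names `sphere_sdf` and `penetration_penalty` are hypothetical. The only idea shown is that an SDF is negative inside the shape, so a simple penalty on negative values measures interpenetration.

```python
import numpy as np

def sphere_sdf(points, center, radius):
    """Signed distance to a sphere: negative inside, zero on the surface, positive outside.
    Assumption: the sphere is a toy stand-in for a learned body SDF."""
    return np.linalg.norm(points - center, axis=-1) - radius

def penetration_penalty(points, center, radius):
    """Sum of squared penetration depths for query points that fall inside the shape."""
    d = sphere_sdf(points, center, radius)
    depth = np.maximum(-d, 0.0)  # only points with negative distance contribute
    return float(np.sum(depth ** 2))

# Three scene points: one at the center, one outside, one halfway inside.
scene_points = np.array([[0.0, 0.0, 0.0],
                         [2.0, 0.0, 0.0],
                         [0.5, 0.0, 0.0]])
center = np.zeros(3)
distances = sphere_sdf(scene_points, center, 1.0)
penalty = penetration_penalty(scene_points, center, 1.0)
```

In an optimization loop, a penalty of this kind would be minimized with respect to pose or object placement; with a learned SDF the same query would go through the network instead of the closed-form sphere.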

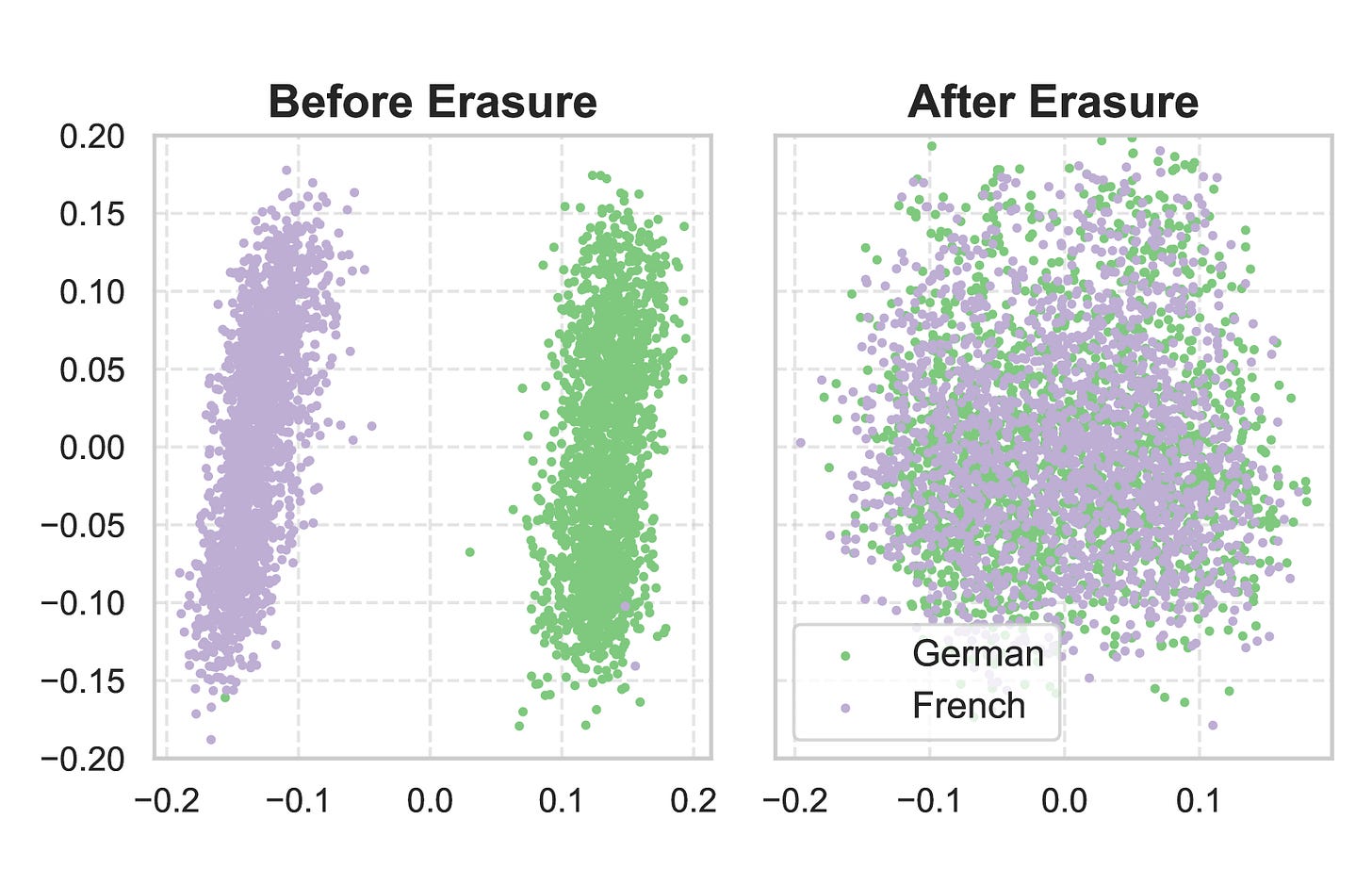

2. The Medium Is Not the Message: Deconfounding Text Embeddings via Linear Concept Erasure

Linear concept erasure eliminates source and language bias in text embeddings without degrading performance.

✍️ Authors: Yu Fan, Yang Tian, Shauli Ravfogel, Mrinmaya Sachan, Elliott Ash, Alexander Hoyle

🏛️ Labs: Law, Economics, and Data Science Group; Language, Reasoning and Education Lab

⚡ Summary

Text embeddings often encode confounding information like source or language that distorts document similarity metrics.

This paper demonstrates that applying LEACE, a linear concept erasure method, to remove these confounders significantly improves clustering and retrieval performance across various embedding models and datasets.

The method involves simple linear transformations on pretrained embeddings, making it computationally inexpensive.

Importantly, the debiased embeddings maintain their quality on out-of-distribution tasks, suggesting the technique preserves useful semantic information.
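The linear transformation can be sketched in a few lines of NumPy. This is an illustrative reimplementation of the closed-form LEACE eraser on synthetic data, not the authors' code; the names `fit_leace` and `erase`, the toy data, and the numerical thresholds are assumptions. The eraser whitens the embeddings, projects out the directions that covary with the confounder label, and un-whitens.

```python
import numpy as np

def fit_leace(X, Z, tol=1e-10):
    """Fit a linear eraser so that erased embeddings have zero cross-covariance with Z.
    X: (n, d) embeddings. Z: (n, k) confounder labels, e.g. a 0/1 source indicator."""
    n, d = X.shape
    mu = X.mean(axis=0)
    Xc, Zc = X - mu, Z - Z.mean(axis=0)
    sigma_xx = Xc.T @ Xc / n
    sigma_xz = Xc.T @ Zc / n

    # Whitening matrix W = Sigma_xx^{-1/2} and its pseudo-inverse, via eigendecomposition.
    evals, evecs = np.linalg.eigh(sigma_xx)
    keep = evals > tol
    V, lam = evecs[:, keep], evals[keep]
    W = (V / np.sqrt(lam)) @ V.T
    W_pinv = (V * np.sqrt(lam)) @ V.T

    # Orthogonal projection onto the column space of W @ Sigma_xz.
    U, s, _ = np.linalg.svd(W @ sigma_xz, full_matrices=False)
    U = U[:, s > tol * max(s.max(), 1.0)]
    P = U @ U.T

    eraser = np.eye(d) - W_pinv @ P @ W
    return mu, eraser

def erase(X, mu, eraser):
    """Apply the fitted eraser to row-vector embeddings."""
    return (X - mu) @ eraser.T + mu

# Toy data: two "sources" whose embeddings differ by a constant shift.
rng = np.random.default_rng(0)
n, d = 400, 8
Z = rng.integers(0, 2, size=(n, 1)).astype(float)
shift = rng.normal(size=d)
X = rng.normal(size=(n, d)) + Z * shift

mu, eraser = fit_leace(X, Z)
X_erased = erase(X, mu, eraser)
```

Because the eraser is a single `d × d` matrix, applying it to a corpus of pretrained embeddings costs one matrix multiplication, which is why the approach is cheap compared with retraining the embedding model.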

3. The Geometry of LLM Quantization: GPTQ as Babai's Nearest Plane Algorithm

Connecting LLM quantization to lattice theory reveals GPTQ's hidden geometric meaning and theoretical guarantees.

✍️ Authors: Jiale Chen, Torsten Hoefler, Dan Alistarh

🏛️ Lab: Scalable Parallel Computing Lab

⚡ Summary

This paper reveals that GPTQ, a popular method for quantizing large language models, is mathematically equivalent to Babai's nearest plane algorithm from lattice theory when GPTQ is executed back-to-front, from the last dimension to the first.

This connection provides a geometric interpretation of GPTQ's error propagation as orthogonal projections onto successive hyperplanes and establishes formal error bounds for the no-clipping case.

The authors propose a new "min-pivot" quantization ordering that should reduce error and suggest that decades of lattice algorithm research can now be applied to improve LLM quantization.

This theoretical foundation opens a two-way channel between quantization and lattice theory, potentially enabling more efficient deployment of billion-parameter models.
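For readers unfamiliar with the lattice side of this connection, the following is a minimal sketch of the classical Babai nearest plane algorithm, written as back-substitution with rounding on a QR factorization. It is not GPTQ and does not reproduce how the paper builds a lattice from a layer; the function name `babai_nearest_plane` and the toy basis `B` are assumptions chosen for illustration.

```python
import numpy as np

def babai_nearest_plane(B, t):
    """Approximate the closest lattice point to t in the lattice spanned by the columns of B.
    Works from the last basis vector to the first, rounding one coefficient at a time."""
    Q, R = np.linalg.qr(B)   # B = Q R, with R upper triangular
    y = Q.T @ t              # target expressed in the orthonormal frame
    n = B.shape[1]
    c = np.zeros(n)
    for j in range(n - 1, -1, -1):
        # Remove the contribution of coefficients that are already fixed, then round.
        residual = y[j] - R[j, j + 1:] @ c[j + 1:]
        c[j] = np.round(residual / R[j, j])
    return c.astype(int), B @ c

# Toy 2D lattice basis (columns) and a target near the lattice point B @ [3, -2].
B = np.array([[2.0, 1.0],
              [0.0, 3.0]])
z_true = np.array([3, -2])
target = B @ z_true + np.array([0.3, -0.4])
coeffs, lattice_point = babai_nearest_plane(B, target)
```

Each step fixes one integer coefficient and propagates the resulting error to the remaining coordinates, which is the structure the summary describes as projections onto successive hyperplanes.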

Other noteworthy articles

HIVMedQA: Benchmarking large language models for HIV medical decision support: Evaluating LLMs for HIV care reveals reasoning challenges and surprising performance patterns across model types

Maximizing Prefix-Confidence at Test-Time Efficiently Improves Mathematical Reasoning: Selecting the most promising solution prefix outperforms traditional methods while using less computation

DialogueForge: LLM Simulation of Human-Chatbot Dialogue: Fine-tuning smaller LLMs narrows the gap with larger models in simulating human-chatbot dialogues

Efficient Visual Appearance Optimization by Learning from Prior Preferences: Leveraging prior user preferences to speed up visual design optimization through meta-learning