ETH AI Digest: #15

1000x faster physics simulations, uncertainty-guided RL exploration, interpretable diffusion models

In this week's digest:

1000x Faster Physics Simulation: A neural latent-space integrator enables real-time simulation of deformable objects on portable devices, with a reported 1000x speedup over traditional approaches.

Strategic AI Exploration: A new uncertainty-guided exploration framework improves sample efficiency in zero-shot reinforcement learning.

Seeing Inside AI Art: B-cos networks make text-to-image diffusion models more transparent by revealing how individual prompt tokens influence generated images.

Selected Papers of the Week

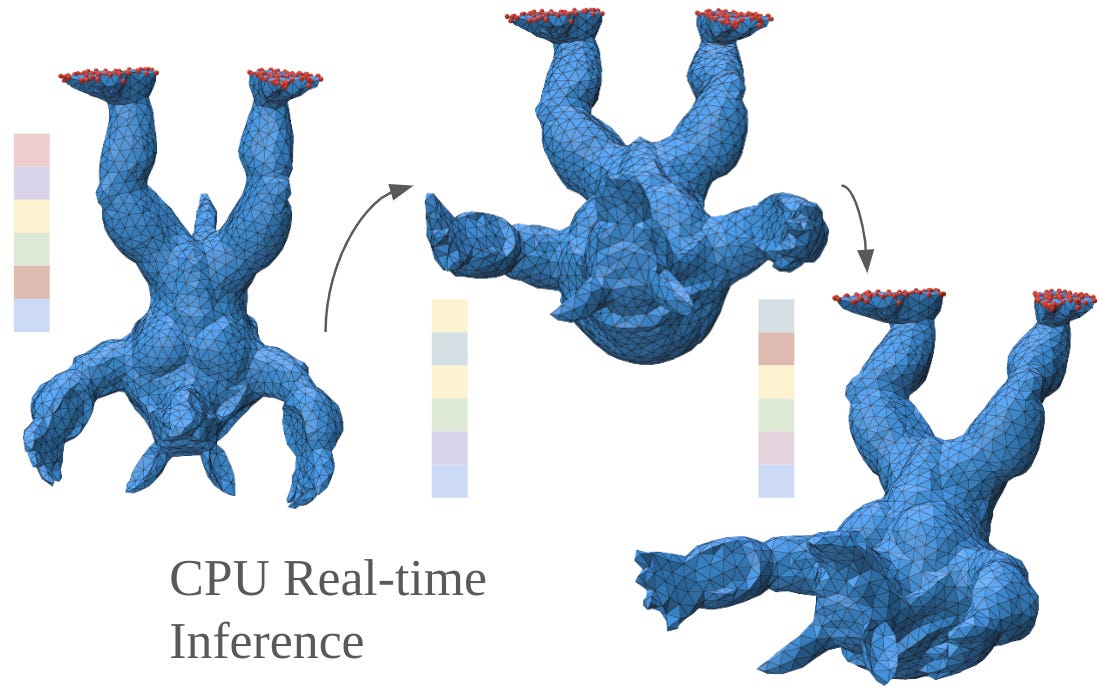

1. Self-supervised Learning of Latent Space Dynamics

Accelerating physics simulation by 1000x through self-supervised learning in latent space.

✍️ Authors: Yue Li, Gene Wei-Chin Lin, Egor Larionov, Aljaz Bozic, Doug Roble, Ladislav Kavan, Stelian Coros, Bernhard Thomaszewski, Tuur Stuyck, Hsiao-yu Chen

🏛️ Lab: Computational Robotics Lab

⚡ Summary

This paper addresses the challenge of simulating deformable objects in real-time on portable devices where computational resources are limited.

The researchers introduce a neural latent-space integrator that operates entirely in a compressed representation, eliminating costly full-space computations.

Their self-supervised learning approach with strategic data augmentation ensures stable, physically plausible simulations for thousands of frames.

The authors report a 1000x speedup over traditional approaches, enabling real-time performance on a CPU for complex deformable objects such as cloth, rods, and solids.
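To make the idea of integrating in a compressed representation concrete, here is a minimal toy sketch, not the authors' implementation. It assumes a linear `encoder`/`decoder` pair and a hand-written `latent_step` (a damped oscillator) as stand-ins for the trained networks; all names, sizes, and constants are hypothetical. The point is only the control flow: encode once, step entirely in latent space, and decode only the frames you need.

```python
import numpy as np

rng = np.random.default_rng(0)

FULL_DIM, LATENT_DIM = 3000, 8  # hypothetical sizes, e.g. 1000 vertices x 3 coords

# Hypothetical stand-ins for trained networks: a random linear decoder
# and its pseudo-inverse as the encoder.
decoder = rng.standard_normal((FULL_DIM, LATENT_DIM)) / np.sqrt(FULL_DIM)
encoder = np.linalg.pinv(decoder)


def latent_step(z_curr, z_prev):
    """Placeholder for a learned integrator: next latent state from the last two.

    Here it is a damped oscillator; a real system would use a trained network.
    """
    velocity = z_curr - z_prev
    return z_curr + 0.95 * velocity - 0.05 * z_curr


def rollout(x0, num_frames):
    """Encode the initial state once, then integrate purely in latent space."""
    z_prev = z_curr = encoder @ x0
    latents = []
    for _ in range(num_frames):
        z_prev, z_curr = z_curr, latent_step(z_curr, z_prev)
        latents.append(z_curr)
    return np.stack(latents)


x0 = rng.standard_normal(FULL_DIM)
latents = rollout(x0, num_frames=1000)

# Decode to full space only when a frame is actually needed (e.g. for rendering).
frame = decoder @ latents[-1]
```

Each step in this toy touches only `LATENT_DIM` numbers rather than `FULL_DIM`, which is the intuition behind avoiding full-space computation during time stepping.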

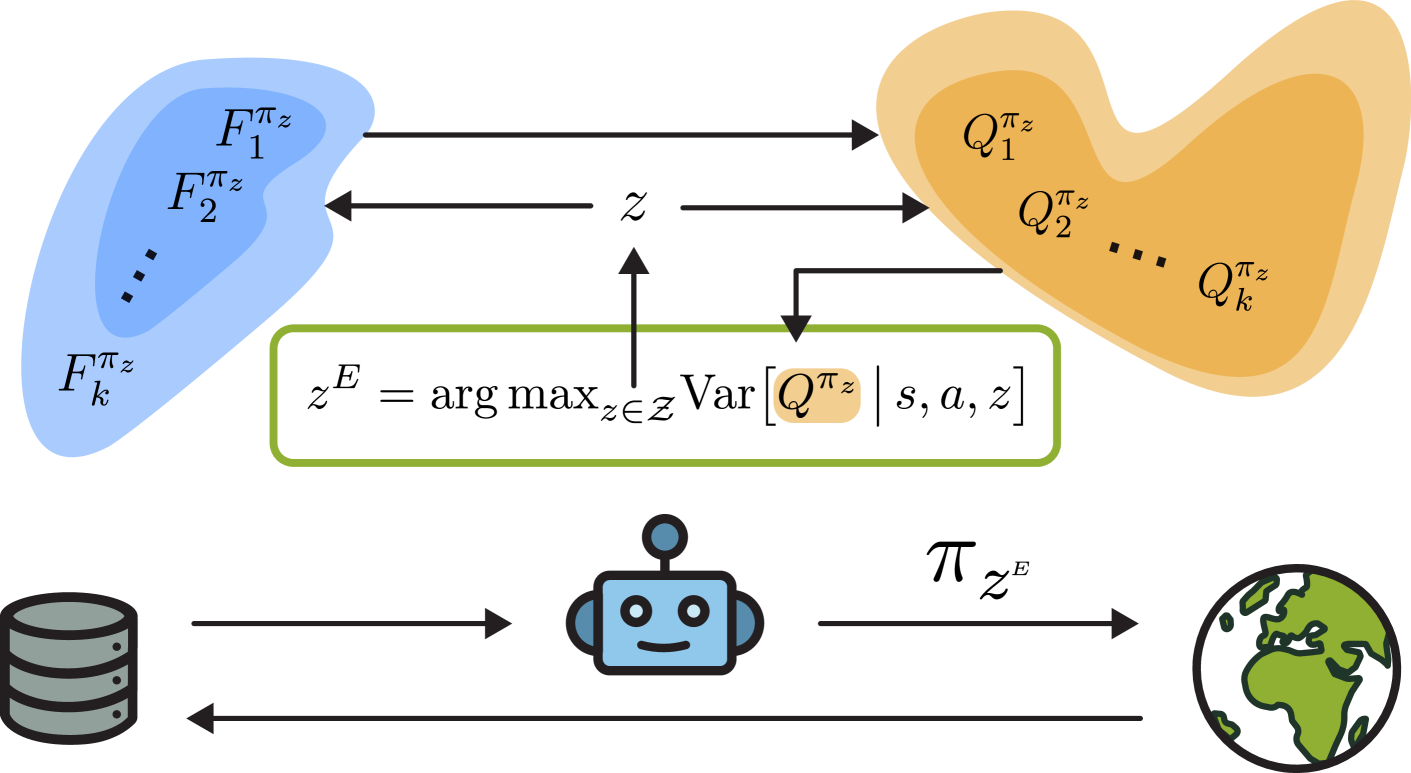

2. Epistemically-guided forward-backward exploration

Using epistemic uncertainty to guide exploration makes forward-backward representations more sample-efficient.

✍️ Authors: Núria Armengol Urpí, Marin Vlastelica, Georg Martius, Stelian Coros

🏛️ Lab: Computational Robotics Lab

⚡ Summary

Zero-shot reinforcement learning aims to learn optimal policies for any reward function, but current methods use exploration strategies disconnected from the learning algorithm.

This paper introduces an epistemically-guided exploration framework for forward-backward representations that uses ensemble disagreement to measure uncertainty and collect the most informative data.

By directing exploration toward states and actions with high predictive uncertainty, the method significantly improves sample efficiency across multiple control tasks.

Experiments show the approach outperforms random exploration and other baselines while maintaining or improving asymptotic performance.
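The ensemble-disagreement idea in this summary can be illustrated with a small sketch. This is an assumption-laden toy, not the paper's method: random linear predictors stand in for the ensemble of learned representations, and names such as `disagreement` and `pick_exploratory_action` are hypothetical. It shows only the selection rule: score each candidate action by how much the ensemble members disagree, then explore where disagreement is highest.

```python
import numpy as np

rng = np.random.default_rng(0)

NUM_MEMBERS, STATE_DIM, ACTION_DIM, OUT_DIM = 5, 4, 2, 3  # hypothetical sizes

# Hypothetical ensemble: each member is a random linear predictor over (state, action).
weights = rng.standard_normal((NUM_MEMBERS, OUT_DIM, STATE_DIM + ACTION_DIM))


def disagreement(state, actions):
    """Variance across ensemble members, summed over output dims, per candidate action."""
    states = np.broadcast_to(state, (len(actions), STATE_DIM))
    inputs = np.concatenate([states, actions], axis=1)   # (N, S + A)
    preds = np.einsum("moi,ni->mno", weights, inputs)    # (M, N, O)
    return preds.var(axis=0).sum(axis=-1)                # (N,)


def pick_exploratory_action(state, candidate_actions):
    """Choose the candidate action on which the ensemble disagrees most."""
    scores = disagreement(state, candidate_actions)
    return candidate_actions[np.argmax(scores)], scores


state = rng.standard_normal(STATE_DIM)
candidates = rng.uniform(-1.0, 1.0, size=(16, ACTION_DIM))
action, scores = pick_exploratory_action(state, candidates)
```

High disagreement serves as a proxy for epistemic uncertainty: where members trained on the same data still differ, more data is likely to be informative.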

3. Interpretable Diffusion Models with B-cos Networks

Making diffusion models transparent by revealing how prompt tokens influence generated images.

✍️ Authors: Nicola Bernold, Moritz Vandenhirtz, Alice Bizeul, Julia E. Vogt

🏛️ Lab: Medical Data Science Group

⚡ Summary

Text-to-image diffusion models generate impressive images but remain difficult to interpret, making prompt adjustments tedious.

This paper introduces B-cos diffusion models that provide inherent interpretability by showing how individual prompt tokens influence specific regions in generated images.

The approach enables detection of cases where models fail to reflect prompt concepts, with experiments showing that semantically meaningful tokens consistently receive higher relevance scores.

The approach currently trades some image quality for interpretability, but it is a step toward more transparent generative AI.

Other noteworthy articles

BLaST: High Performance Inference and Pretraining using BLock Sparse Transformers: Achieving 95% sparsity with custom kernels delivers 16.7x speedup and 3x memory reduction for LLMs

Learning Steerable Imitation Controllers from Unstructured Animal Motions: Teaching robots to move like animals while responding to user commands through AI-powered motion synthesis

Feature-Based vs. GAN-Based Learning from Demonstrations: When and Why: When to choose explicit or implicit rewards for learning from demonstrations in robotics and animation

Co-DETECT: Collaborative Discovery of Edge Cases in Text Classification: Helping experts improve text classification by automatically identifying ambiguous edge cases