ETH AI Digest: #13

Video-to-3D worlds, lazy learning prevents forgetting, graph databases boost ML accuracy 44%

In this week's digest:

Video-Powered 3D Worlds: An AI system generates coherent 3D environments from text or images by leveraging the spatial understanding of video models.

The Power of Lazy Learning: Research shows that limiting feature learning to an intermediate, "lazier" level helps neural networks avoid catastrophic forgetting during sequential learning.

Next-Gen Graph Intelligence: A new database architecture improves graph neural network accuracy by 44% by supporting higher-order graph structures such as hyperedges.

Selected Papers of the Week

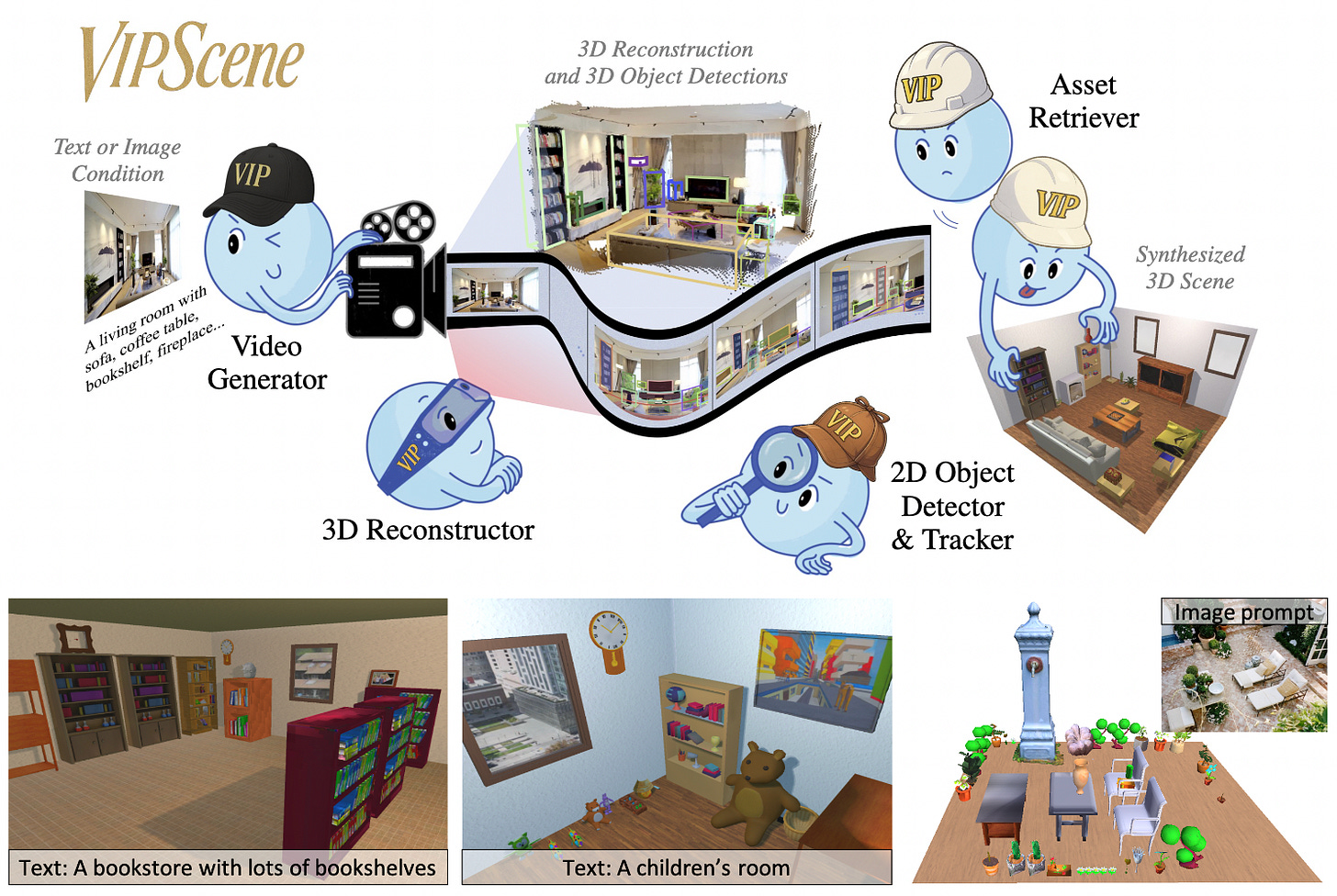

1. Video Perception Models for 3D Scene Synthesis

Leveraging video models' spatial knowledge to generate coherent 3D environments from text or images.

✍️ Authors: Rui Huang, Guangyao Zhai, Zuria Bauer, Marc Pollefeys, Federico Tombari, Leonidas Guibas, Gao Huang, Francis Engelmann

🏛️ Lab: Computer Vision and Geometry Lab

⚡ Summary

Creating realistic 3D scenes traditionally requires expert knowledge, and current AI approaches struggle to produce coherent spatial layouts.

VIPSCENE addresses this by leveraging video generation models' inherent spatial understanding to create consistent 3D environments from text or image prompts.

The system reconstructs scene geometry from generated videos, identifies objects, and replaces them with high-quality 3D assets while ensuring physical plausibility.

The authors also introduce FPVSCORE, a novel evaluation metric that uses first-person views and multimodal LLMs and aligns better with human judgment than traditional metrics.

2. The Importance of Being Lazy: Scaling Limits of Continual Learning

Why "lazy" neural networks forget less when learning sequentially.

✍️ Authors: Jacopo Graldi, Alessandro Breccia, Giulia Lanzillotta, Thomas Hofmann, Lorenzo Noci

🏛️ Lab: Data Analytics Lab

⚡ Summary

This paper investigates why neural networks struggle with catastrophic forgetting during continual learning, reconciling contradictory findings about the role of model scale.

The authors demonstrate that the degree of feature learning, not scale alone, determines forgetting behavior, with optimal performance achieved at an intermediate level of feature learning.

They identify a non-linear transition between "lazy" and "rich" training regimes that explains when increasing model width helps reduce forgetting.

Their findings reveal that task similarity affects the optimal feature learning level, which transfers across model scales, enabling efficient hyperparameter tuning.

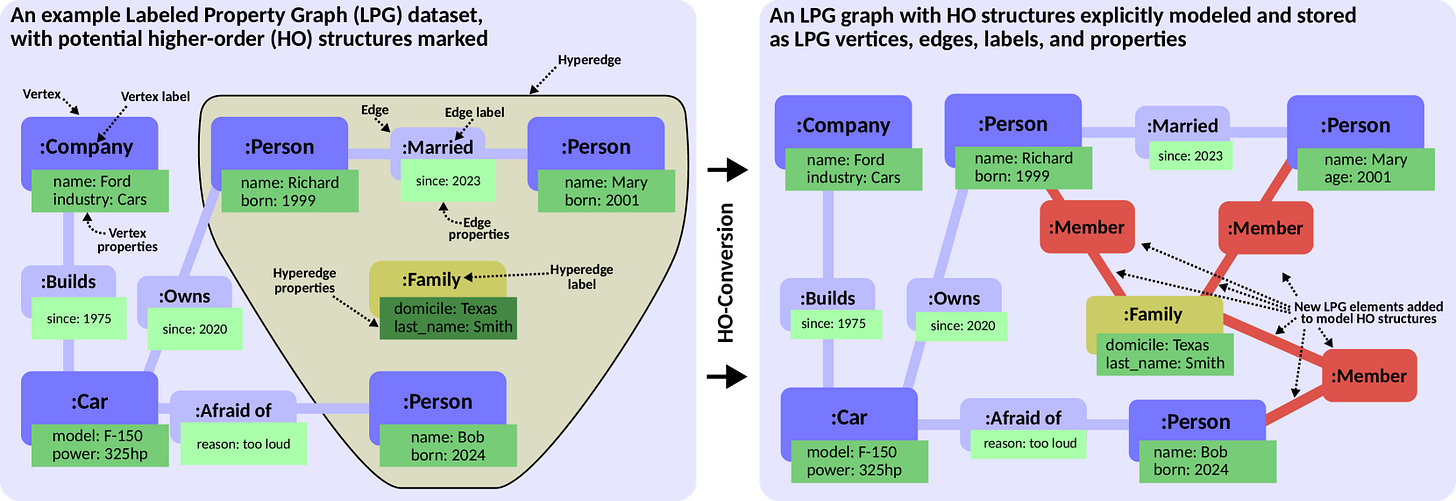

3. Higher-Order Graph Databases

Extending graph databases with higher-order structures improves graph neural network accuracy by 44%.

✍️ Authors: Maciej Besta, Shriram Chandran, Jakub Cudak, Patrick Iff, Marcin Copik, Robert Gerstenberger, Tomasz Szydlo, Jürgen Müller, Torsten Hoefler

🏛️ Lab: Scalable Parallel Computing Lab

⚡ Summary

Current graph databases lack support for higher-order structures like hyperedges and subgraphs, limiting their effectiveness for advanced analytics and machine learning.

This paper introduces Higher-Order Graph Databases (HO-GDBs), which represent complex structures as heterogeneous graphs within existing database systems while maintaining ACID properties.

The authors implement a prototype on Neo4j that scales to graphs with hundreds of thousands of entities and improves graph neural network accuracy by 44%.

Their approach bridges the gap between transactional graph storage and higher-order analytics without requiring specialized systems.
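To make the idea of storing a hyperedge inside an ordinary graph database concrete, here is a minimal sketch of one common lowering: each hyperedge becomes its own labeled node, linked to its members by plain binary edges. The function names, the `Hyperedge` and `Entity` labels, the `MEMBER_OF` relation, and the `he:` prefix are all assumptions made for illustration; the digest does not describe the paper's actual encoding or its Neo4j schema.

```python
from collections import defaultdict


def lower_hypergraph(hyperedges):
    """Lower a hypergraph to a heterogeneous graph with only binary edges.

    hyperedges: dict mapping a hyperedge id (str) to a set of member node ids.
    Returns (nodes, edges): nodes maps node id -> label, and edges is a list
    of (source, relation, target) triples.
    Hypothetical encoding, not the paper's schema.
    """
    nodes = {}
    edges = []
    for he_id, members in hyperedges.items():
        he_node = f"he:{he_id}"          # assumed naming convention
        nodes[he_node] = "Hyperedge"     # the hyperedge becomes a node
        for member in sorted(members):
            nodes.setdefault(member, "Entity")
            edges.append((member, "MEMBER_OF", he_node))
    return nodes, edges


def lift_hypergraph(nodes, edges):
    """Recover the hyperedges from the lowered heterogeneous graph."""
    hyperedges = defaultdict(set)
    for src, rel, dst in edges:
        if rel == "MEMBER_OF" and nodes.get(dst) == "Hyperedge":
            hyperedges[dst[len("he:"):]].add(src)
    return dict(hyperedges)


if __name__ == "__main__":
    h = {"e1": {"a", "b", "c"}, "e2": {"b", "d"}}
    nodes, edges = lower_hypergraph(h)
    print(edges)
    print(lift_hypergraph(nodes, edges) == h)
```

Because the lowered form uses only nodes and binary edges, it could in principle be stored in an existing graph database without special support. This sketch ignores transactions, empty hyperedges, and id collisions between entities and hyperedge nodes.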

Other noteworthy articles

CL-Splats: Continual Learning of Gaussian Splatting with Local Optimization: Updating 3D scenes 75× faster with localized Gaussian Splatting optimization for evolving environments

Improving Large Language Model Safety with Contrastive Representation Learning: Teaching LLMs to distinguish harmless from harmful content using triplet-based contrastive learning
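For readers unfamiliar with triplet-based contrastive learning, the following is a minimal sketch of the standard triplet margin loss: an anchor representation is pulled toward a positive example and pushed away from a negative one. The Euclidean distance, the `margin` value, and the toy 2D vectors are assumptions for illustration; the digest does not specify the loss, distance, or representations the paper actually uses.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet margin loss (generic form, not the paper's exact loss).

    The loss is zero once the negative is at least `margin` farther from
    the anchor than the positive is.
    """
    d_pos = euclidean(anchor, positive)
    d_neg = euclidean(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)


if __name__ == "__main__":
    anchor = [0.0, 0.0]
    positive = [0.0, 1.0]
    # Negative already far away: the margin is satisfied, so no loss.
    print(triplet_loss(anchor, positive, [3.0, 4.0]))
    # Negative too close: the loss is positive and would drive an update.
    print(triplet_loss(anchor, positive, [0.0, 1.5]))
```

In a training setup, the vectors would be model representations rather than hand-written points, and the loss would be minimized by gradient descent.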