ETH AI Digest: #12

Multimodal Vision Models, Real-Time Hallucination Detection, and Memory-Efficient LLM Training

In this week's digest:

Unified Egocentric Intelligence — Vision model combines video, depth, gaze, and motion data to achieve 30x faster perception while maintaining accuracy.

Efficient Hallucination Detection — New RAUQ system spots LLM inaccuracies by tracking attention patterns with minimal computational overhead.

Smarter Model Optimization — Researchers prove that gradient-free optimization naturally finds better solutions for large language models through flat minima convergence.

Selected Papers of the Week

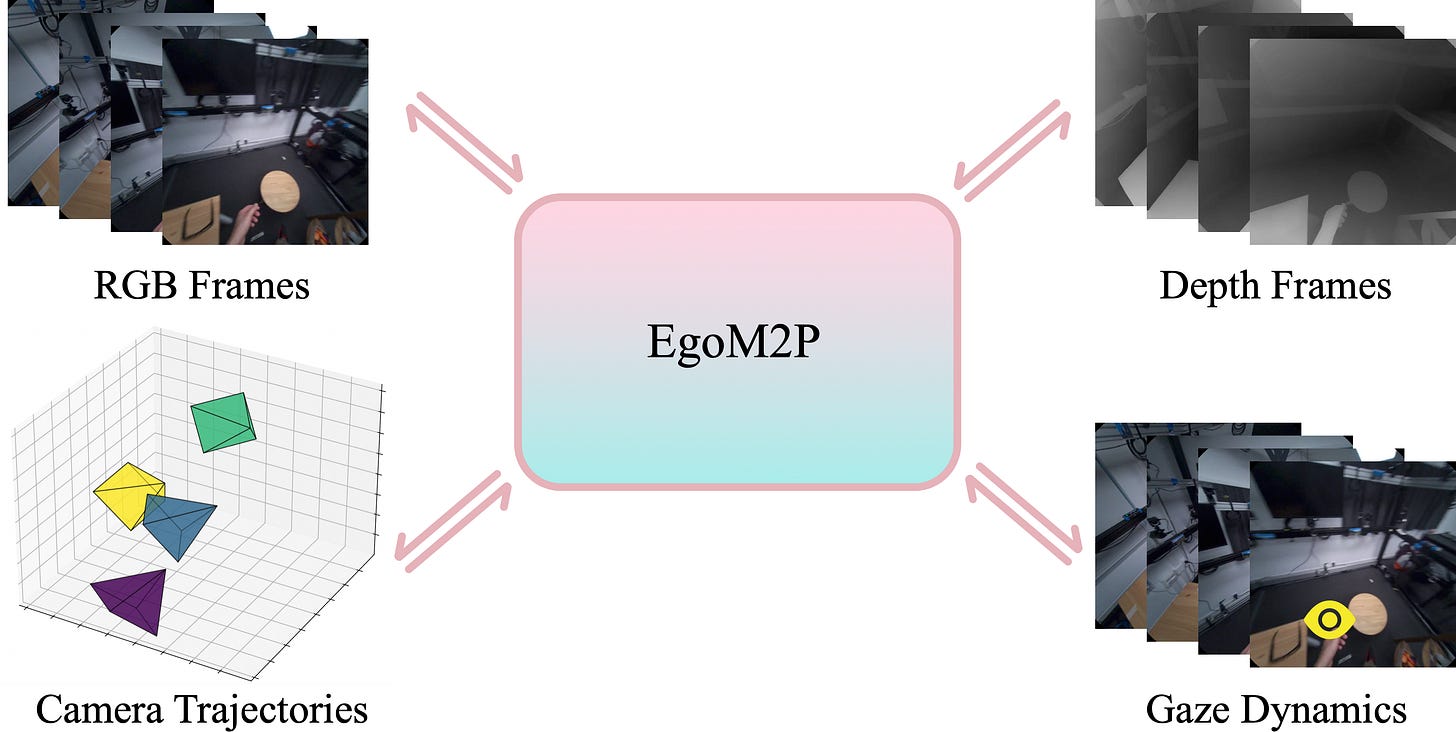

1. EgoM2P: Egocentric Multimodal Multitask Pretraining

First multitask foundation model integrating four modalities for faster, more accurate egocentric perception.

✍️ Authors: Gen Li, Yutong Chen, Yiqian Wu, Kaifeng Zhao, Marc Pollefeys, Siyu Tang

🏛️ Lab: Computer Vision and Geometry Lab, Computer Vision and Learning Group

⚡ Summary

EgoM2P addresses the challenge of building unified models for egocentric vision by integrating RGB video, depth, gaze, and camera trajectories through temporal tokenizers and masked modeling.

The model performs multiple tasks including camera tracking, gaze prediction, depth estimation, and video synthesis, matching or outperforming specialist models while being up to 30x faster.

It effectively handles missing modalities in heterogeneous datasets and demonstrates strong generalization to unseen data, establishing a foundation for applications in augmented reality and robotics.

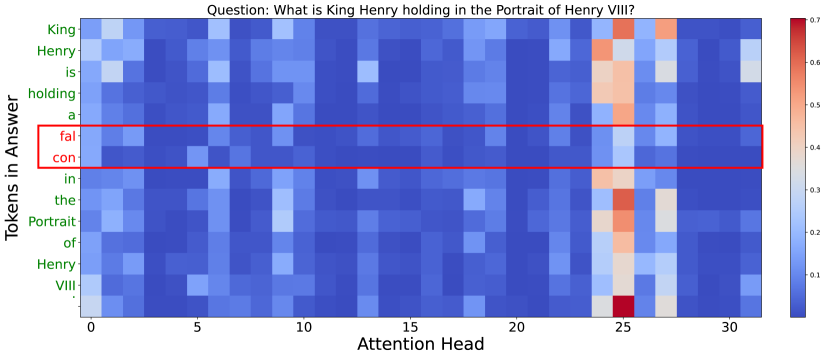

2. Uncertainty-Aware Attention Heads: Efficient Unsupervised Uncertainty Quantification for LLMs

RAUQ: Detecting LLM hallucinations by analyzing attention drops in uncertainty-aware heads.

✍️ Authors: a, Gleb Kuzmin, Ekaterina Fadeeva, Ivan Lazichny, Alexander Panchenko, Maxim Panov, Timothy Baldwin, Mrinmaya Sachan, Preslav Nakov, Artem Shelmanov

🏛️ Lab: Language, Reasoning and Education Lab

⚡ Summary

Large language models often produce fluent but factually incorrect outputs known as hallucinations, creating a need for efficient detection methods.

RAUQ identifies specific "uncertainty-aware" attention heads where drops in attention to preceding tokens signal hallucinations, and uses these patterns to compute uncertainty scores.

This unsupervised approach outperforms existing methods across 12 tasks and 4 LLMs while adding less than 1% computational overhead.

Unlike sampling-based or supervised alternatives, RAUQ requires no task-specific labels and works with a single forward pass, making it practical for real-time applications.
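To make the attention signal concrete, here is a deliberately simplified sketch, not RAUQ's actual scoring rule. It assumes that "preceding tokens" means the immediately preceding token and that a single head's attention matrix is already available as a NumPy array. The function names `prev_token_attention` and `attention_drop_score` are hypothetical.

```python
import numpy as np

def prev_token_attention(attn: np.ndarray) -> np.ndarray:
    """Attention each token pays to its immediate predecessor.

    attn is a (T, T) causal attention matrix for one head, where
    attn[i, j] is the weight token i places on token j. The first
    sub-diagonal holds attn[i, i - 1] for i = 1..T-1.
    """
    return np.diagonal(attn, offset=-1)

def attention_drop_score(attn: np.ndarray) -> float:
    """Toy uncertainty score: 1 minus the mean previous-token attention.

    Assumption for illustration only: lower attention to the previous
    token is treated as higher uncertainty.
    """
    return float(1.0 - prev_token_attention(attn).mean())

# Toy 3-token attention matrix (rows sum to 1, causal mask applied).
toy_attn = np.array([
    [1.0, 0.0, 0.0],
    [0.9, 0.1, 0.0],   # token 1 attends strongly to token 0
    [0.2, 0.1, 0.7],   # token 2 barely attends to token 1: a "drop"
])
score = attention_drop_score(toy_attn)
```

Because a score of this kind is read off attention weights the model already computes during generation, it needs no extra sampling, which is in line with the single-forward-pass property described above.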

3. Zeroth-Order Optimization Finds Flat Minima

Gradient-free methods implicitly minimize the trace of the Hessian, explaining their success in memory-constrained LLM fine-tuning.

✍️ Authors: Liang Zhang, Bingcong Li, Kiran Koshy Thekumparampil, Sewoong Oh, Michael Muehlebach, Niao He

🏛️ Lab: Optimization & Decision Intelligence Group

⚡ Summary

This paper reveals that zeroth-order optimization methods naturally converge to flat minima without requiring gradients.

The authors prove that the two-point estimator implicitly regularizes the trace of the Hessian, and they provide theoretical convergence guarantees for convex and smooth functions.

Experiments on binary classification and language model fine-tuning confirm that zeroth-order methods consistently reach a lower Hessian trace than gradient descent.

This finding helps explain why zeroth-order optimization works well for fine-tuning large language models even though its theoretical complexity grows with the parameter dimension.
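The two-point estimator mentioned above can be sketched on a toy problem. This is a minimal illustration, not the paper's code. It assumes Gaussian perturbation directions and a central-difference form, and the names `two_point_estimate`, `smoothed_value`, `A`, and `f` are hypothetical. The intuition for the Hessian-trace link is a second-order Taylor expansion: averaging f(x + λu) over Gaussian u gives roughly f(x) + (λ²/2)·tr(∇²f(x)), so descending on the smoothed objective also penalizes the trace of the Hessian.

```python
import numpy as np

def two_point_estimate(f, x, lam, rng):
    """One two-point zeroth-order gradient estimate of f at x.

    Uses only two function evaluations and no backpropagation.
    """
    u = rng.standard_normal(x.shape)  # random Gaussian direction
    return (f(x + lam * u) - f(x - lam * u)) / (2.0 * lam) * u

def smoothed_value(f, x, lam, rng, n_samples=50_000):
    """Monte Carlo estimate of the smoothed objective E_u[f(x + lam * u)]."""
    u = rng.standard_normal((n_samples,) + x.shape)
    return float(np.mean([f(x + lam * ui) for ui in u]))

# Toy quadratic f(x) = 0.5 * x^T A x, whose Hessian is A (trace = 6).
A = np.diag([1.0, 2.0, 3.0])

def f(x):
    return 0.5 * x @ A @ x

rng = np.random.default_rng(0)
x0 = np.ones(3)
lam = 0.5

# Averaging many estimates approximates the true gradient A @ x0.
avg_grad = np.mean(
    [two_point_estimate(f, x0, lam, rng) for _ in range(20_000)], axis=0
)

# The smoothed objective exceeds f(x0) by about (lam**2 / 2) * trace(A).
gap = smoothed_value(f, x0, lam, rng) - f(x0)
```

For this quadratic the expansion is exact in expectation, so `gap` should come out close to `0.5 * lam**2 * np.trace(A)`. For general functions the relation holds only up to higher-order terms in λ.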

Other noteworthy articles

Syntactic Control of Language Models by Posterior Inference: Precise syntactic control of AI text generation through posterior inference and sequential Monte Carlo

MARS: Processing-In-Memory Acceleration of Raw Signal Genome Analysis Inside the Storage Subsystem: Revolutionizing genome analysis by processing raw DNA signals directly inside storage systems

Educators' Perceptions of Large Language Models as Tutors: Comparing Human and AI Tutors in a Blind Text-only Setting: Educators rate AI tutors higher on empathy, scaffolding, and conciseness in blind comparison study

From Problem-Solving to Teaching Problem-Solving: Aligning LLMs with Pedagogy using Reinforcement Learning: Training LLMs to guide students through problems rather than giving away answers