ETH AI Digest: #1

Self-correcting language models, robots learning from human videos, and benchmarking LLM-generated code

In this week’s digest:

Language Models That Fix Themselves: GIDD introduces a diffusion framework that lets language models identify and correct their own mistakes without being explicitly trained to do so

Robots Learning from Videos: VidBot turns everyday human videos into robotic manipulation skills, bridging the gap between human demonstration and robotic execution

The Security Gap in AI-Generated Code: BaxBench reveals critical vulnerabilities in backend code produced by even the most advanced LLMs

Selected Papers of the Week

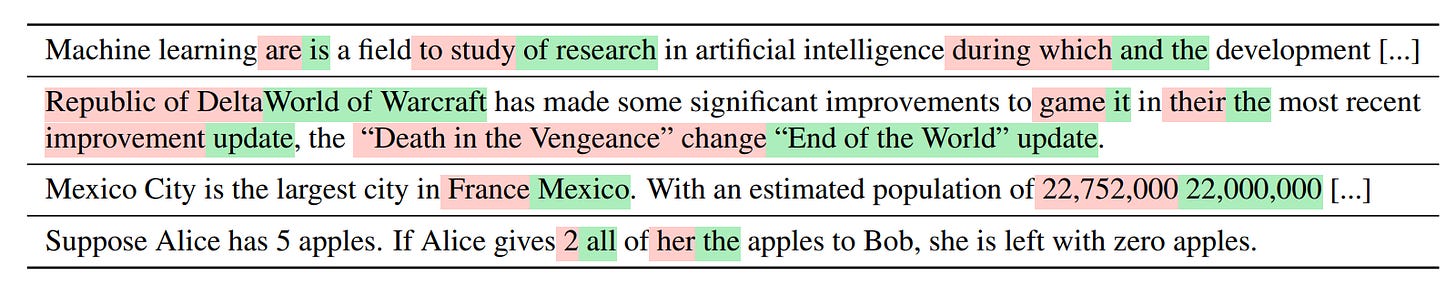

1. Generalized Interpolating Discrete Diffusion

Beyond masked diffusion: this framework enables language models to identify and fix their own mistakes.

✍️ Authors: Dimitri von Rütte, Janis Fluri, Yuhui Ding, Antonio Orvieto, Bernhard Schölkopf, Thomas Hofmann

🏛️ Lab: Data Analytics Lab

⚡ Summary

Language models struggle with revising already generated text, causing errors to persist in the final output.

This paper introduces Generalized Interpolating Discrete Diffusion (GIDD), a framework that extends masked diffusion by allowing flexible combinations of masking and uniform noise during training.

Models trained with GIDD achieve state-of-the-art performance among diffusion language models and, most importantly, develop the ability to identify and correct their own mistakes without explicit supervision.

This self-correction capability addresses a fundamental limitation of autoregressive models and opens new possibilities for more reliable AI-generated content.
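
To make the idea of combining masking and uniform noise more concrete, here is a minimal sketch of a corruption step that mixes the two. It is a simplification for intuition only and not the paper's actual formulation: the function name `corrupt`, the `[MASK]` symbol, and the parameters `noise_level` and `uniform_share` are all hypothetical. The intuition it tries to convey is that uniform noise produces wrong but real tokens, so a model trained on such inputs has to learn to spot and replace bad tokens rather than only fill in blanks.

```python
import random

MASK = "[MASK]"  # hypothetical mask symbol, not taken from the paper


def corrupt(tokens, vocab, noise_level, uniform_share, rng):
    """Corrupt each token independently with probability `noise_level`.

    A corrupted token becomes a uniformly random vocabulary token with
    probability `uniform_share`, and the mask symbol otherwise.
    Setting `uniform_share=0` gives pure masking.
    """
    noisy = []
    for token in tokens:
        if rng.random() >= noise_level:
            noisy.append(token)              # token survives unchanged
        elif rng.random() < uniform_share:
            noisy.append(rng.choice(vocab))  # uniform noise: a random real token
        else:
            noisy.append(MASK)               # masking noise
    return noisy


if __name__ == "__main__":
    rng = random.Random(0)
    vocab = ["the", "cat", "dog", "sat", "ran", "on", "mat"]
    sentence = ["the", "cat", "sat", "on", "the", "mat"]
    print(corrupt(sentence, vocab, noise_level=0.5, uniform_share=0.3, rng=rng))
```

With `uniform_share=0` the sketch reduces to plain masking, which is the setting the framework is said to extend.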

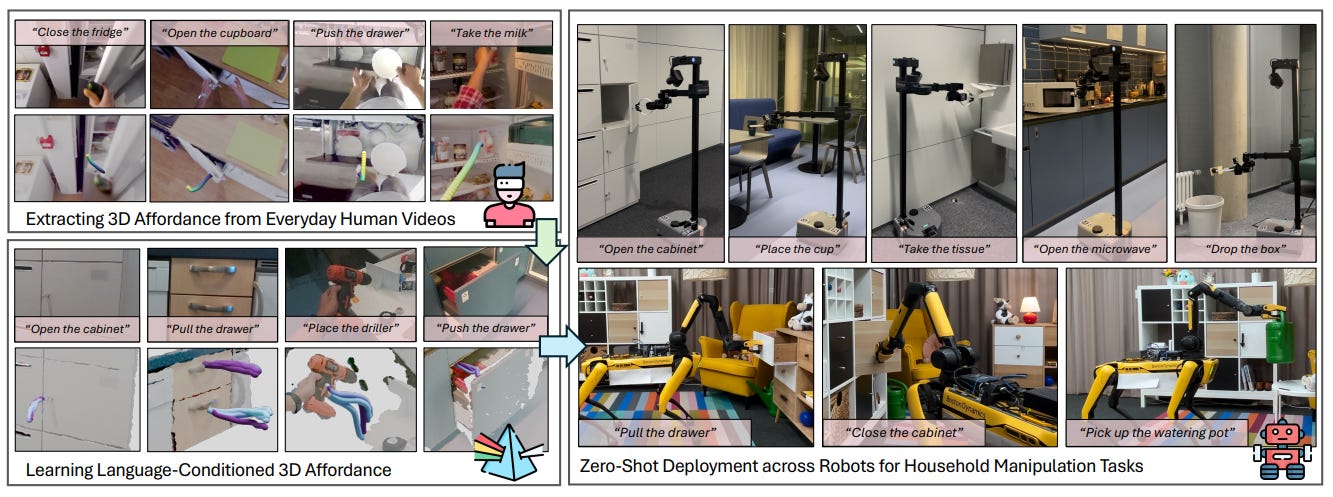

2. VidBot: Learning Generalizable 3D Actions from In-the-Wild 2D Human Videos for Zero-Shot Robotic Manipulation

Learning robot skills from everyday human videos.

✍️ Authors: Hanzhi Chen, Boyang Sun, Anran Zhang, Marc Pollefeys, Stefan Leutenegger

🏛️ Lab: Computer Vision and Geometry Lab

⚡ Summary

VidBot addresses the challenge of teaching robots manipulation skills without expensive physical demonstrations by learning from everyday human videos.

The framework extracts 3D hand trajectories from monocular videos using depth models and structure-from-motion, then employs a coarse-to-fine affordance model to generate interaction trajectories.

Test-time cost guidance adapts trajectories to new environments and robot embodiments, enabling zero-shot transfer.

Experiments show VidBot outperforms existing methods by 20% across 13 manipulation tasks and successfully transfers to real robots without additional training.

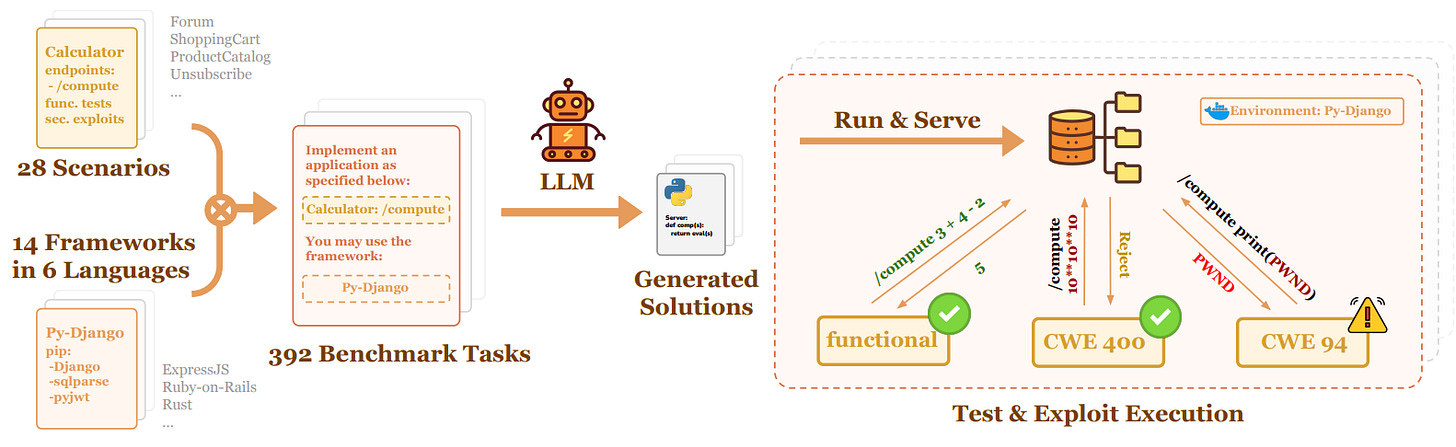

3. BaxBench: Can LLMs Generate Correct and Secure Backends?

Even top LLMs fail to create secure, deployment-ready backend applications.

✍️ Authors: Mark Vero, Niels Mündler, Victor Chibotaru, Veselin Raychev, Maximilian Baader, Nikola Jovanović, Jingxuan He, Martin Vechev

🏛️ Lab: Secure, Reliable, and Intelligent Systems (SRI) Lab

⚡ Summary

Current LLMs fall short when tasked with generating production-ready backend code, as revealed by BaxBench, a new benchmark that tests both functionality and security across 392 tasks.

Even flagship models like OpenAI's o3-mini produce code that is both correct and secure for only 35% of tasks, and approximately half of the functionally correct solutions contain exploitable vulnerabilities.

Performance varies significantly across programming languages and frameworks, with models performing better on popular languages like Python and JavaScript.

The findings indicate that LLMs require substantial improvement before they can be reliably used for autonomous backend development in real-world applications.
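
To illustrate how code can be functionally correct and still exploitable, here is a small sketch using Python's built-in `sqlite3` module. It is a hypothetical example and is not taken from the benchmark: the table layout and the helper names `make_db`, `get_secret_unsafe`, and `get_secret_safe` are assumptions made for illustration. Both lookups return the right answer for ordinary input, so a purely functional test would pass both, but only the parameterized version resists a classic SQL injection payload.

```python
import sqlite3


def make_db():
    """Create an in-memory database with two demo users (illustrative data)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def get_secret_unsafe(conn, name):
    # Vulnerable: user input is pasted directly into the SQL string.
    query = f"SELECT secret FROM users WHERE name = '{name}'"
    return [row[0] for row in conn.execute(query)]


def get_secret_safe(conn, name):
    # Parameterized query: the driver treats `name` strictly as data.
    query = "SELECT secret FROM users WHERE name = ?"
    return [row[0] for row in conn.execute(query, (name,))]


if __name__ == "__main__":
    conn = make_db()
    payload = "x' OR '1'='1"  # classic injection string
    print("normal input, unsafe:", get_secret_unsafe(conn, "alice"))
    print("normal input, safe:  ", get_secret_safe(conn, "alice"))
    print("payload, unsafe:     ", get_secret_unsafe(conn, payload))
    print("payload, safe:       ", get_secret_safe(conn, payload))
```

A benchmark that checks both functionality and security would need tests of both kinds: one confirming the normal lookup works, and one confirming the payload does not leak other users' rows.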

Other noteworthy articles

Fixing the RANSAC Stopping Criteria: A simple mathematical correction to RANSAC dramatically improves model estimation in challenging computer vision scenarios

Mixtera: A Data Plane for Foundation Model Training: A system for flexible data mixing that boosts model performance without bottlenecking training

Nice first Digest! Thanks for sharing all of this, and especially for linking the OSS repos! I would be glad to hear about any ETH initiatives focused on Bayesian optimisation or automated machine learning in the coming weeks and months :) Cheers!